Research

My current work focuses on AI for software engineering. The SWE-agent project was the first open-source system to demonstrate how modern language models can effectively use tools to solve complex repository-level tasks (as measured on the SWE-bench benchmark). SWE-agent EnIGMA showed that the same system with different tools can set state-of-the-art performance for red-teaming cybersecurity applications.

Our most recent project, SWE-smith, is a novel pipeline for generating software engineering training data at scale. It allowed us to set state-of-the-art performance among open-source models on the SWE-bench Verified benchmark.

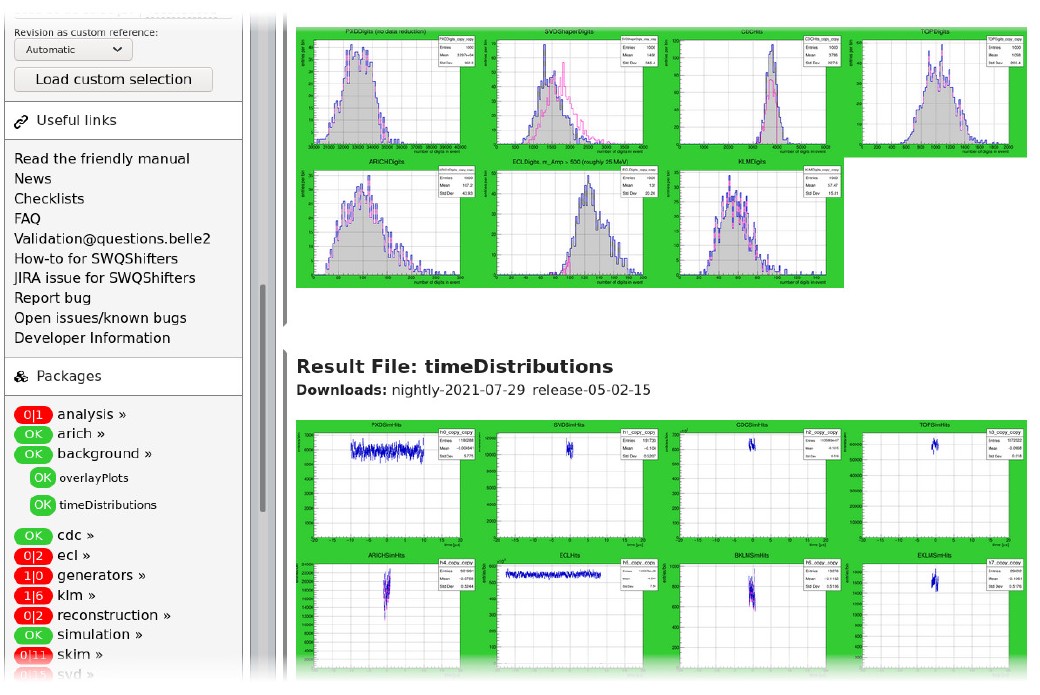

I also continue to support research into Graph Neural Networks for High Energy Physics, research I started as a Postdoc at Princeton University.

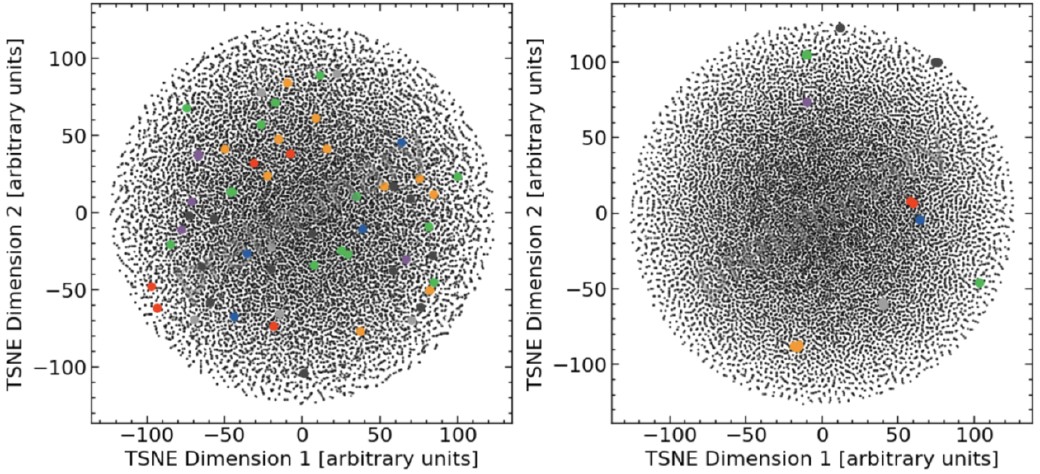

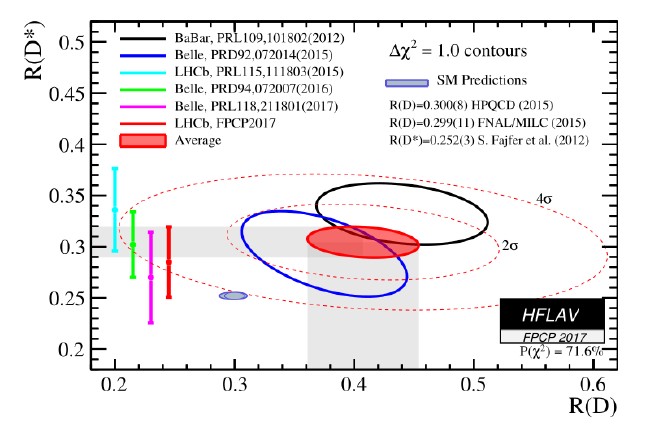

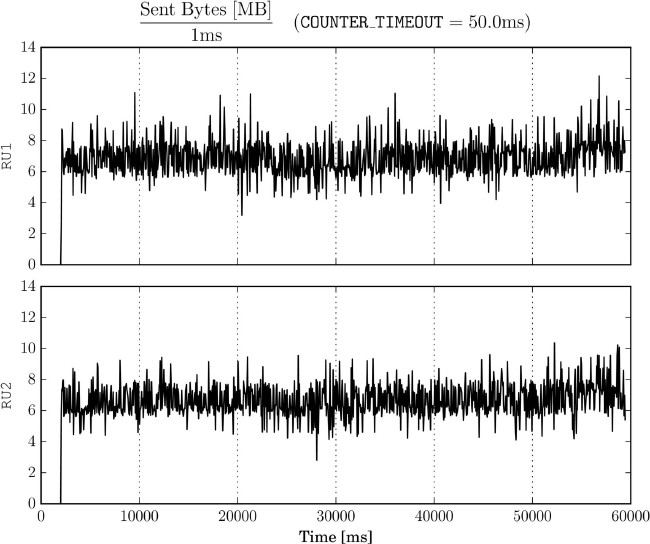

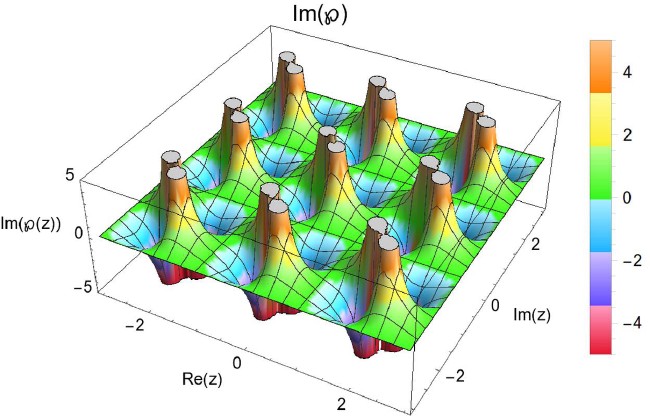

During my PhD and studies, I focused on various aspects of data analysis, software engineering, maths and physics, including calibrating machine learning algorithms, clustering analyses, integration testing, anomaly detection, differential equations, data acquisition simulations, supersymmetry, and elliptic functions.

Recent News

SWE-agent: Autonomous Software Engineering

In early 2024, most software engineers were using large AI models as chatbot assistants, answering questions or generating small pieces of code on request. In addition, smaller language models were powering code autocompletion tools in code editors, speeding up the writing of new code.

However, software engineers spend most of their time not writing new code, but rather fixing bugs and implementing features in existing codebases, often comprising tens or hundreds of thousands of lines of code. This means that a large amount of time is spent understanding the codebase, finding the correct place to make changes, and making small-scale modifications. The simple AI tools of early 2024 were not well-suited to this task.

To address this gap, we built SWE-agent. Mimicking the workflow of human engineers, SWE-agent uses tools to perform tasks, working incrementally toward complicated goals. In order to autonomously fix bugs and implement complex features in large software repositories, SWE-agent takes time to navigate the codebase, read select files, and finally make modifications, before testing and validating the changes.

SWE-agent was the first open-source system to achieve a significant score on SWE-bench, a benchmark that assesses the performance of AI systems on real-world software engineering tasks. Since the initial release in April 2024, SWE-agent has continued to evolve and regularly ranks at the top of the SWE-bench leaderboard while maintaining a lightweight and accessible design.

SWE-agent enables your language model of choice to use tools to fix issues in real GitHub repositories, find cybersecurity vulnerabilities, or perform any custom task.

It was the first open-source system to achieve a significant score on SWE-bench, far outperforming RAG baselines and creating a breakthrough for agentic AI in software engineering.

SWE-agent was released just days after Devin, its commercial equivalent, showed its first public demo. With it, we demonstrated that a simple open-source system with optimized agent tooling could perform similarly to (if not better than) a well-funded company's demo, democratizing access to AI-powered software engineering capabilities.

The central innovation discussed in our paper is the design and optimization of the agent-computer interface (ACI) that allows the language model to effectively navigate, understand, and modify large codebases. This includes custom shell commands, file editing interfaces, and feedback mechanisms.
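To make the idea of an agent-computer interface more concrete, here is a minimal sketch of what such an interface could look like over an in-memory file store. Everything in it (`ToyInterface`, `search`, `view`, `edit`, the window size) is a hypothetical illustration and not the actual SWE-agent command set; it only shows the general pattern of narrow commands that return short, structured feedback to the model.

```python
class ToyInterface:
    """Hypothetical agent-computer interface over an in-memory file store.

    Illustrative only: these commands are NOT the real SWE-agent tools.
    """

    def __init__(self, files: dict[str, str], window: int = 3):
        self.files = {name: text.splitlines() for name, text in files.items()}
        self.window = window  # number of lines shown per view

    def search(self, term: str) -> str:
        """List file:line locations containing the term, as compact feedback."""
        hits = [
            f"{name}:{i}"
            for name, lines in self.files.items()
            for i, line in enumerate(lines, start=1)
            if term in line
        ]
        return "\n".join(hits) if hits else f"No matches for {term!r}"

    def view(self, name: str, start: int) -> str:
        """Show a small numbered window of a file instead of the whole file."""
        lines = self.files[name]
        end = min(start + self.window - 1, len(lines))
        return "\n".join(f"{i}: {lines[i - 1]}" for i in range(start, end + 1))

    def edit(self, name: str, line_no: int, new_text: str) -> str:
        """Replace one line and report what changed."""
        lines = self.files[name]
        if not 1 <= line_no <= len(lines):
            return f"Error: {name} has {len(lines)} lines"
        old = lines[line_no - 1]
        lines[line_no - 1] = new_text
        return f"Replaced line {line_no}: {old.strip()!r} -> {new_text.strip()!r}"


# A scripted stand-in for the language model: locate, fix, then re-inspect.
aci = ToyInterface({"calc.py": "def area(w, h):\n    return w + h\n"})
location = aci.search("return")
feedback = aci.edit("calc.py", 2, "    return w * h")
print(location)
print(feedback)
print(aci.view("calc.py", 1))
```

The design choice this sketch tries to illustrate is that each command returns a small, explicit response (a location, a numbered window, a change summary), so the model never has to parse a full file dump to decide its next step.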

Since the initial release in April 2024, development has never stopped, and SWE-agent still regularly ranks at the top of the SWE-bench leaderboard while maintaining a lightweight, modular architecture that makes it easy to extend and customize for different use cases.

CodeClash: Benchmarking Goal-Oriented Software Engineering

LMs have gotten pretty good at solving GitHub issues. But real software development isn't a series of isolated tasks. It's driven by goals: Improve user retention, increase revenue, reduce costs. We build to achieve outcomes, not to close tickets. What if AI evaluations reflected this dynamism of real-world software development?

In CodeClash, LM agents compete via their codebases across multi-round tournaments to achieve high-level goals. In every round, agents get to improve their codebase as they see fit. Write notes, analyze past rounds, run test suites, refactor code, whatever helps. Then, they face off against an opponent in one of multiple arenas.
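The round structure can be sketched as a simple loop: an edit phase for each agent, followed by a competition phase. This is a toy model under strong assumptions, not the CodeClash implementation: `Agent`, `skill_gain`, `codebase_quality`, and the `arena` rule are all hypothetical stand-ins, and a real codebase is of course not a single number.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    skill_gain: int            # hypothetical: improvement per edit phase
    codebase_quality: int = 0  # hypothetical stand-in for a real codebase
    notes: list = field(default_factory=list)
    wins: int = 0

    def edit_phase(self, round_no: int) -> None:
        """Improve the codebase and write a note for future rounds."""
        self.codebase_quality += self.skill_gain
        self.notes.append(f"round {round_no}: quality={self.codebase_quality}")


def arena(a: Agent, b: Agent):
    """Hypothetical arena: the higher-quality codebase wins, ties yield None."""
    if a.codebase_quality == b.codebase_quality:
        return None
    return a if a.codebase_quality > b.codebase_quality else b


def tournament(a: Agent, b: Agent, rounds: int) -> None:
    for round_no in range(1, rounds + 1):
        # Edit phase: each agent changes its own codebase as it sees fit.
        a.edit_phase(round_no)
        b.edit_phase(round_no)
        # Competition phase: the codebases face off in the arena.
        winner = arena(a, b)
        if winner is not None:
            winner.wins += 1


agent_a = Agent("agent_a", skill_gain=2)
agent_b = Agent("agent_b", skill_gain=1)
tournament(agent_a, agent_b, rounds=3)
print(agent_a.wins, agent_b.wins)
```

The point of the sketch is only that state (the codebase and the notes) persists across rounds, so early decisions affect later outcomes.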

CodeClash shows how far AI agents are still from being able to lead software development. Not only are they inferior to human baselines, but our paper reveals various ways in which models produce messy codebases, hallucinate about past rounds, and fail to work towards a higher-level goal.

SWE-smith: Scaling Data for Software Engineering Agents

In order to train language models to be good software engineers, we need large amounts of high-quality training data — examples of software bugs and what steps were taken in order to fix them. But collecting such data is difficult: it often requires hours of manual work and complex setups that are hard to scale.

That's where SWE-smith comes in.

SWE-smith is a system that automatically creates realistic training data for AI agents that work with code. Given any existing software project, it builds a runnable version of the code and then generates hundreds or thousands of small tasks — for example, by artificially introducing a bug. We can then use existing language models to attempt to fix these bugs, keep only the successful attempts, and then use this data to train a new language model.
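The steps above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the SWE-smith code: the "repository" is a single function, `introduce_bug` is a hypothetical bug generator that swaps an operator, and the candidate fixes are hard-coded instead of coming from a language model.

```python
# Toy "repository": the source of one function, checked by run_tests below.
ORIGINAL_SOURCE = "def add(a, b):\n    return a + b\n"


def run_tests(source: str) -> bool:
    """Return True if the toy test suite passes for the given source."""
    namespace: dict = {}
    exec(source, namespace)  # load the candidate implementation
    add = namespace["add"]
    return add(2, 3) == 5 and add(-1, 1) == 0


def introduce_bug(source: str) -> str:
    """Hypothetical bug generator: swap '+' for '-' in the function body."""
    return source.replace("a + b", "a - b")


def make_task(source: str):
    """Create a task only if the bug breaks tests that passed before."""
    buggy = introduce_bug(source)
    if run_tests(source) and not run_tests(buggy):
        return {"buggy_source": buggy}
    return None


def filter_attempts(task: dict, attempts: list) -> list:
    """Keep only the attempted fixes that make the tests pass again."""
    return [
        {"buggy_source": task["buggy_source"], "fix": attempt}
        for attempt in attempts
        if run_tests(attempt)
    ]


task = make_task(ORIGINAL_SOURCE)
attempts = [
    "def add(a, b):\n    return a * b\n",  # unsuccessful attempt
    "def add(a, b):\n    return a + b\n",  # successful attempt
]
training_examples = filter_attempts(task, attempts)
print(len(training_examples))
```

Because the tests decide both whether a bug counts as a task and whether a fix counts as successful, no manual labeling is needed in this sketch.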

We used SWE-smith to generate over 50,000 tasks from 128 popular open-source repositories and trained SWE-agent-LM-32B using this data. This language model achieved the top scores among open-source models and outperformed GPT-4o on SWE-bench Verified, a benchmark that tests AI agents on real-world software engineering tasks.

Despite recent progress in Language Models for software engineering, collecting training data remains a significant pain point. The procedures to curate such datasets are often complex, necessitating hundreds of hours of human labor; companion execution environments also take up several terabytes of storage, severely limiting their scalability and usability.

To address this pain point, we introduce SWE-smith, a novel pipeline for generating software engineering training data at scale. Given any Python codebase, SWE-smith constructs a corresponding execution environment, then automatically synthesizes 100s to 1,000s of task instances that break existing tests in the codebase. Using SWE-smith, we create a dataset of 50k instances sourced from 128 GitHub repositories, an order of magnitude larger than all previous works.

We train SWE-agent-LM-32B, achieving a 40.2% Pass@1 resolve rate on the SWE-bench Verified benchmark, outperforming GPT-4o and setting the state of the art among open-source models. We open source SWE-smith (collection procedure, task instances, trajectories, models) to lower the barrier to entry for research in LM systems for automated software engineering.
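For readers unfamiliar with the metric, here is a small sketch of how a Pass@1 resolve rate could be computed. It assumes that Pass@1 means a single attempt per task instance and that the resolve rate is the share of instances whose attempt passes. The task names and outcomes are made up for illustration and are not results from the paper.

```python
def pass_at_1_resolve_rate(resolved_flags: list) -> float:
    """Percentage of task instances resolved with one attempt each."""
    if not resolved_flags:
        raise ValueError("need at least one task instance")
    return 100.0 * sum(resolved_flags) / len(resolved_flags)


# Hypothetical outcomes: one attempt per task, True if the fix passed the tests.
outcomes = {
    "task-1": True,
    "task-2": False,
    "task-3": False,
    "task-4": True,
    "task-5": False,
}
rate = pass_at_1_resolve_rate(list(outcomes.values()))
print(f"{rate:.1f}%")  # 2 of 5 resolved -> 40.0%
```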

SWE-bench Multimodal: Do AI Systems Generalize to Visual Software Domains?

SWE-bench has become the industry-standard evaluation framework for benchmarking autonomous software engineering agents, utilized extensively by all major language model providers. It presents language models with real-world software engineering tasks drawn from GitHub repositories, challenging them to resolve complex issues requiring deep codebase understanding and reasoning beyond typical code generation.

I was particularly involved with our follow-up project, SWE-bench Multimodal. SWE-bench Multimodal generalizes the SWE-bench framework to typical frontend engineering tasks, shifting focus from Python to JavaScript and requiring visual understanding in addition to reasoning and agentic abilities. Agents evaluated under this new benchmark must effectively interpret images provided within task descriptions and use visual feedback during issue resolution and validation. As a result, current state-of-the-art models continue to find this benchmark exceptionally difficult, successfully solving fewer than 25% of the included tasks.

EnIGMA: Interactive Tools Substantially Assist LM Agents in Finding Security Vulnerabilities

Proactively identifying and resolving cybersecurity vulnerabilities through red-teaming exercises, where systems are tested from an attacker’s perspective, is critical to securing modern infrastructure. However, attack vectors are diverse and require a broad set of skills, tools, and knowledge, making them very challenging for AI systems to execute.

We build on the generalist capabilities of SWE-agent to create EnIGMA, an AI agent equipped with various cybersecurity tooling. In particular, we enable the agent to use interactive terminal applications, including debuggers and real-time server interactions. Evaluated across leading Capture The Flag (CTF) cybersecurity benchmarks including NYU CTF, Intercode-CTF, and CyBench, EnIGMA sets new state-of-the-art standards, significantly outperforming existing approaches and marking a notable leap forward in agent-driven cybersecurity.